Introduction

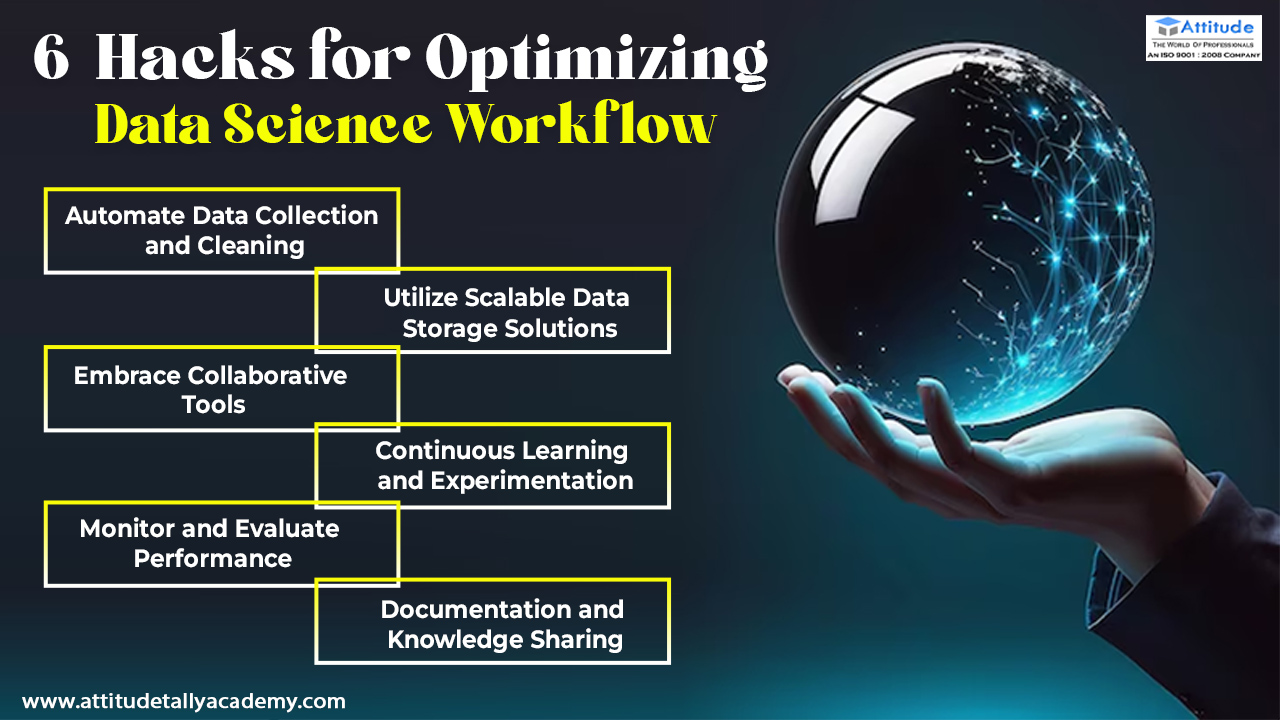

Data science is a rapidly evolving field, demanding efficient workflows to keep pace with the growing volume and complexity of data. Whether you’re a seasoned data scientist or just starting out, streamlining your workflow can significantly enhance productivity and outcomes. Here are six essential hacks to optimize data science processes and boost your efficiency:

- Automate Data Collection and Cleaning

Data collection and cleaning can be time-consuming, but they are essential for accurate analysis. By automating them, you can save valuable time and reduce errors. Python scripts, R scripts, and data integration platforms can pull data from various sources on a schedule instead of by hand. Libraries such as pandas and NumPy then let you script routine cleaning steps, such as removing duplicates, converting types, and handling missing values, so your data is ready for analysis with minimal manual intervention.
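As a rough illustration, a reusable cleaning function in pandas might look like the sketch below. The `clean_frame` helper, the `price` column, and the sample data are all hypothetical, chosen only to show the idea; a real pipeline would apply whatever rules fit its own data.

```python
import pandas as pd


def clean_frame(df: pd.DataFrame) -> pd.DataFrame:
    """Apply a few routine cleaning steps and return a new DataFrame."""
    out = df.copy()
    # Normalize column names: trim whitespace, lowercase, use underscores.
    out.columns = [str(c).strip().lower().replace(" ", "_") for c in out.columns]
    # Drop exact duplicate rows.
    out = out.drop_duplicates()
    # Convert the (hypothetical) "price" column to numbers; unparseable values become NaN.
    out["price"] = pd.to_numeric(out["price"], errors="coerce")
    # Fill missing prices with the median of the remaining values.
    out["price"] = out["price"].fillna(out["price"].median())
    return out.reset_index(drop=True)


# Made-up sample data with messy headers, a duplicate row, and a bad value.
raw = pd.DataFrame(
    {
        " Product ": ["a", "b", "b", "c"],
        "Price": ["10", "n/a", "n/a", "30"],
    }
)
cleaned = clean_frame(raw)
print(cleaned)
```

Keeping the steps in one function means the same cleaning runs identically every time new data arrives, which is where most of the time savings come from.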

- Utilize Scalable Data Storage Solutions

As your data grows, traditional storage solutions may become inefficient and costly. Embrace scalable options such as cloud storage services (e.g., from AWS, Google Cloud, or Azure) and distributed storage and processing frameworks (e.g., Hadoop, Apache Spark). These options scale with your data and can be more cost-effective than fixed on-premises capacity, allowing you to handle large datasets efficiently and keep data processing and retrieval smooth.

- Embrace Collaborative Tools

Data science is often a collaborative effort, involving multiple team members and stakeholders. Using collaborative tools such as Jupyter Notebooks, GitHub, and project management platforms (e.g., Jira, Trello) can enhance teamwork and streamline the workflow. These tools enable seamless code sharing, version control, and project tracking, ensuring everyone stays on the same page and contributes effectively.

- Foster Continuous Learning and Experimentation

The field of data science is ever-evolving, with new techniques and tools emerging regularly. To stay ahead, foster a culture of continuous learning and experimentation within your team. Encourage team members to attend workshops, webinars, and conferences, and experiment with new methodologies and technologies. This approach not only keeps your team updated but also inspires innovation and improves problem-solving skills.

- Monitor and Evaluate Performance

Regularly monitoring and evaluating the performance of your data science models is crucial for ensuring their accuracy and reliability. Choose metrics that fit the task (for example, accuracy, precision and recall, or error rates) and track them over time with performance monitoring tools. By identifying and addressing issues early, you can refine your models, improve their performance, and ensure they deliver accurate and actionable insights.
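One simple way to track a metric over time is a rolling window over recent predictions. The sketch below uses only the Python standard library; the `AccuracyMonitor` class, its window size, and its threshold are hypothetical choices for illustration, not a reference to any particular monitoring tool.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions (illustrative helper)."""

    def __init__(self, window: int = 100, threshold: float = 0.8) -> None:
        # deque with maxlen discards the oldest result once the window is full.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, predicted, actual) -> None:
        """Store whether a single prediction matched the observed outcome."""
        self.results.append(predicted == actual)

    def accuracy(self) -> float:
        """Return the fraction of correct predictions in the current window."""
        if not self.results:
            raise ValueError("no predictions recorded yet")
        return sum(self.results) / len(self.results)

    def needs_attention(self) -> bool:
        """Flag the model only once the window is full and accuracy is below threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.threshold


# Made-up (predicted, actual) pairs: two correct, two incorrect.
monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)

print(monitor.accuracy(), monitor.needs_attention())
```

Waiting for a full window before raising a flag avoids alerts based on only a handful of predictions; the right window and threshold depend on the model and how quickly ground truth becomes available.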

- Prioritize Documentation and Knowledge Sharing

Proper documentation and knowledge sharing are vital for maintaining an efficient workflow. Document your processes, methodologies, and findings to create a knowledge base that can be referenced by your team. Encourage knowledge sharing through regular meetings, presentations, and collaborative platforms. This practice ensures that valuable insights and best practices are accessible to all team members, enhancing overall productivity.

Conclusion

Optimizing your data science workflow doesn’t have to be a daunting task. The six hacks covered here are automating data collection and cleaning, utilizing scalable data storage solutions, embracing collaborative tools, fostering continuous learning and experimentation, monitoring and evaluating performance, and prioritizing documentation and knowledge sharing. Implementing them can streamline your processes and make your team more efficient. Start with the one that addresses your biggest bottleneck and build from there.

Remember, these data science workflow hacks will not only optimize your processes but also drive better results and foster a more productive and innovative team environment.
